Linear regression Learning Statistics with R

One such notion is regression depth, a quality measure for robust linear regression. Statistically speaking, the regression depth of a hyperplane \(\mathcal{H}\) is the smallest number of residuals that must change sign to make \(\mathcal{H}\) a nonfit. This definition also has convenient statistical properties.

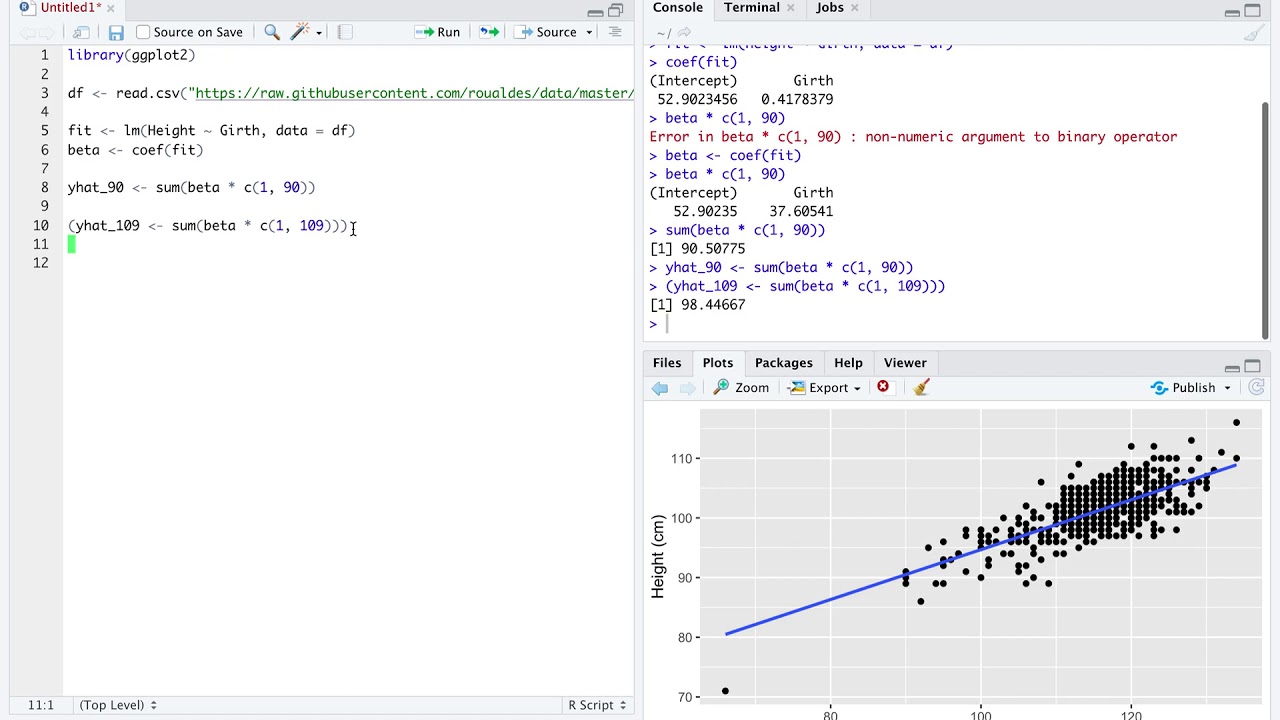

Simple Linear Regression in R, predictions YouTube

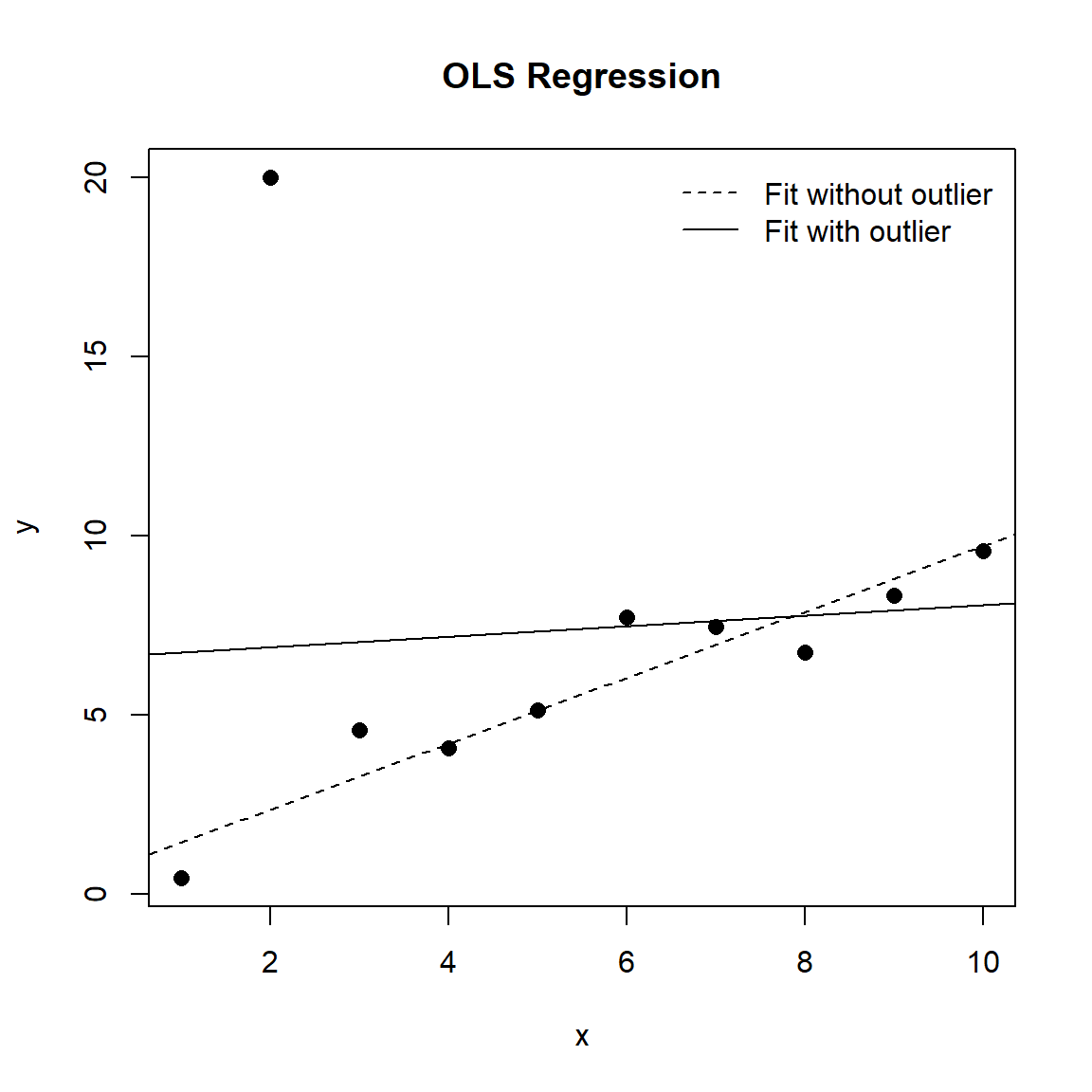

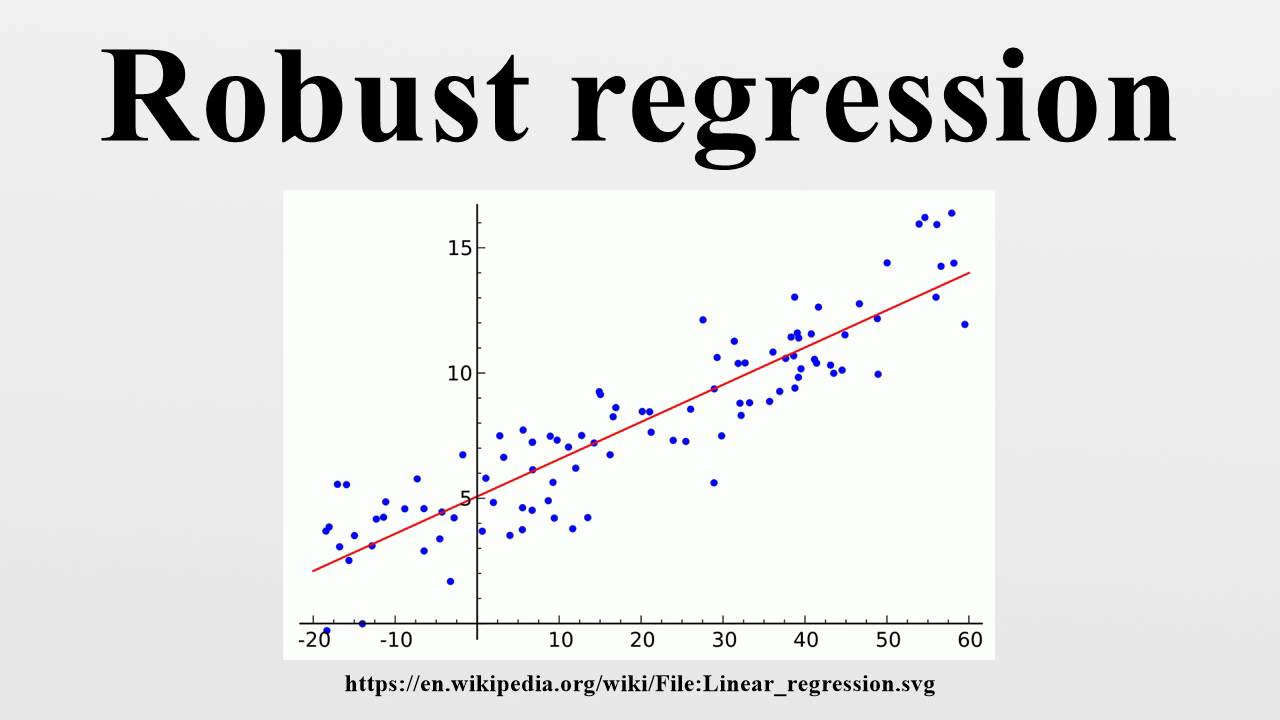

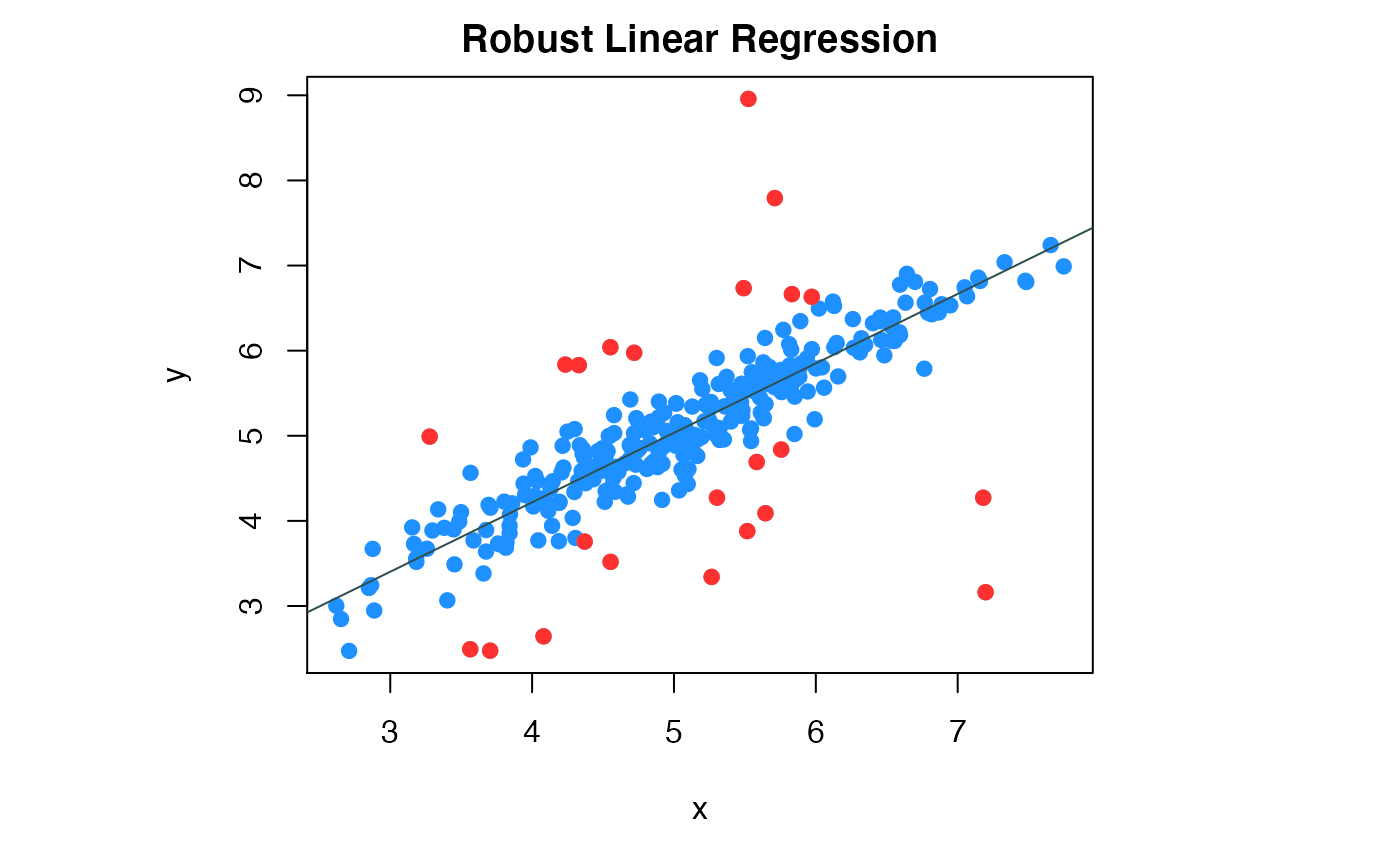

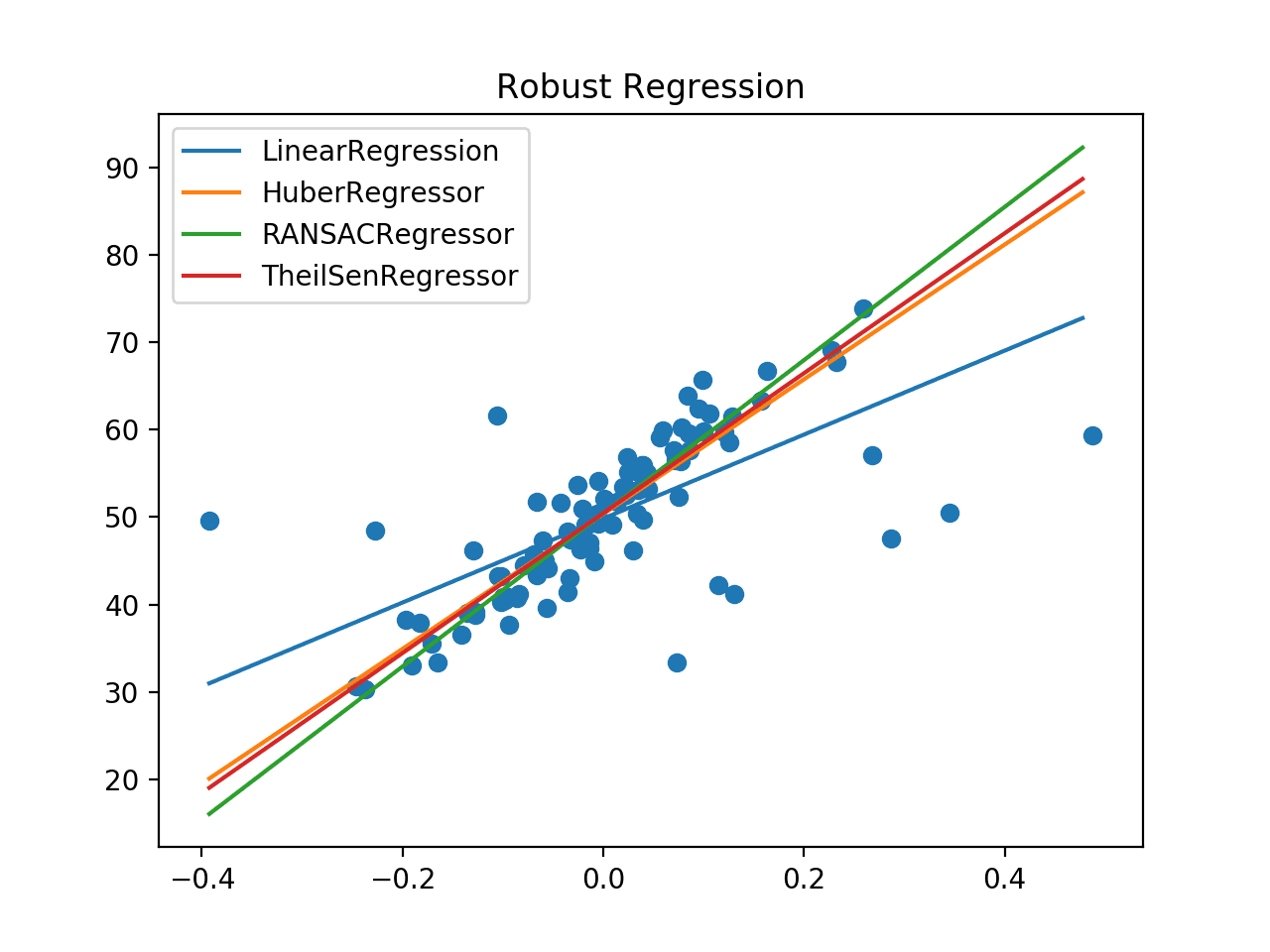

Robust regression methods provide an alternative to least squares regression by requiring less restrictive assumptions. These methods attempt to dampen the influence of outlying cases in order to provide a better fit to the majority of the data. Outliers tend to pull the least squares fit too far in their direction by receiving much more weight than they deserve.

Robust Regression Charles Holbert

Linear regression has been one of the most important statistical data analysis tools. Given the independent and identically distributed (iid) observations \((x_i, y_i)\), \(i = 1, \ldots, n\), in order to understand h… (Source: Robust linear regression: A review and comparison, Communications in Statistics - Simulation and Computation, Vol. 46, No. 8.)

Linear Regression model sample illustration Download Scientific Diagram

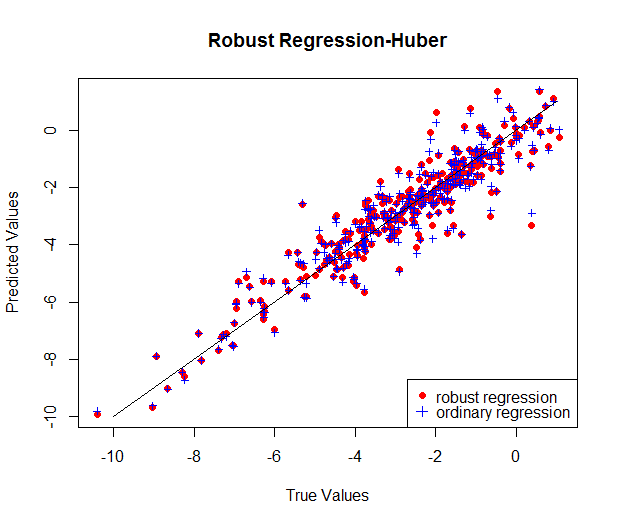

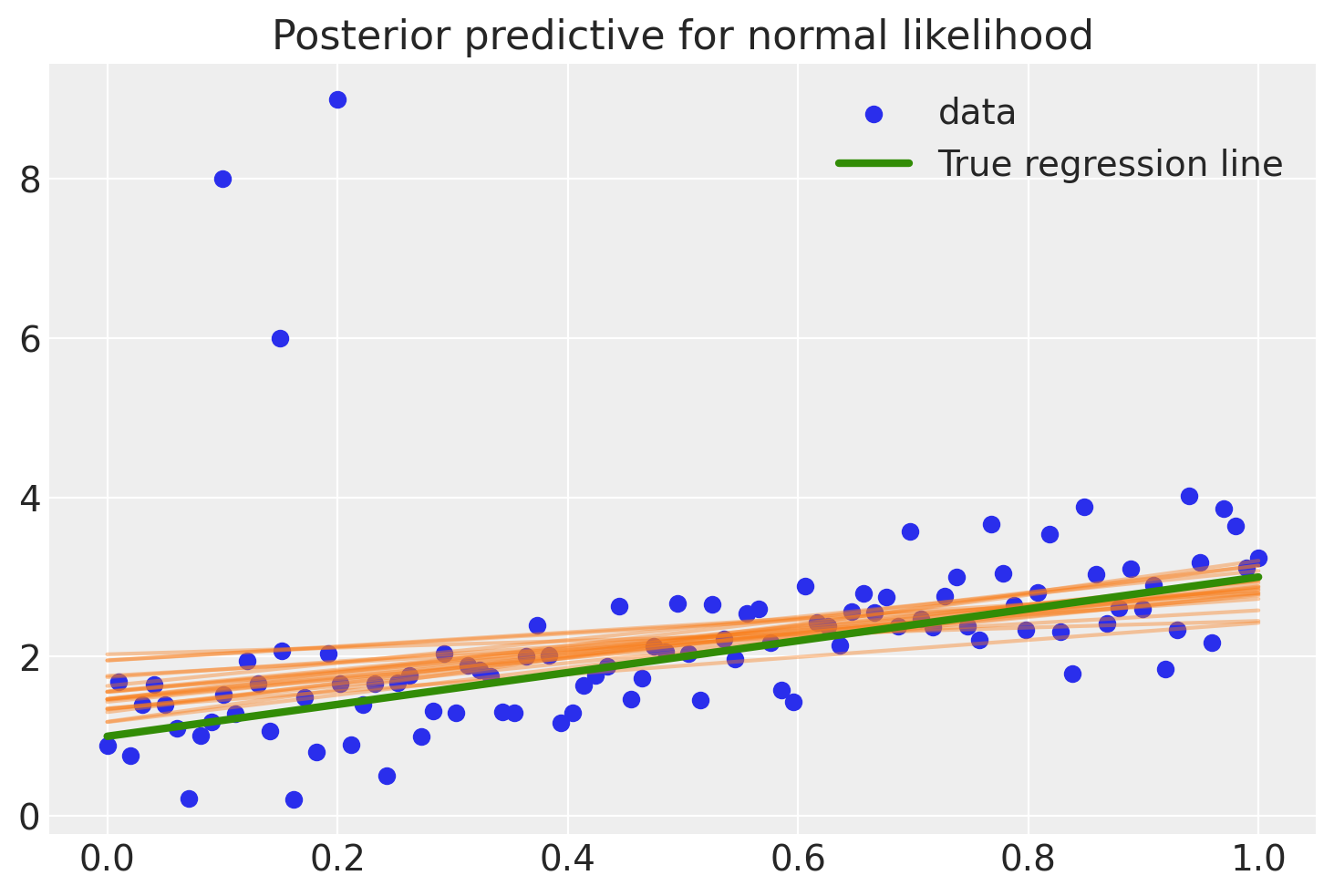

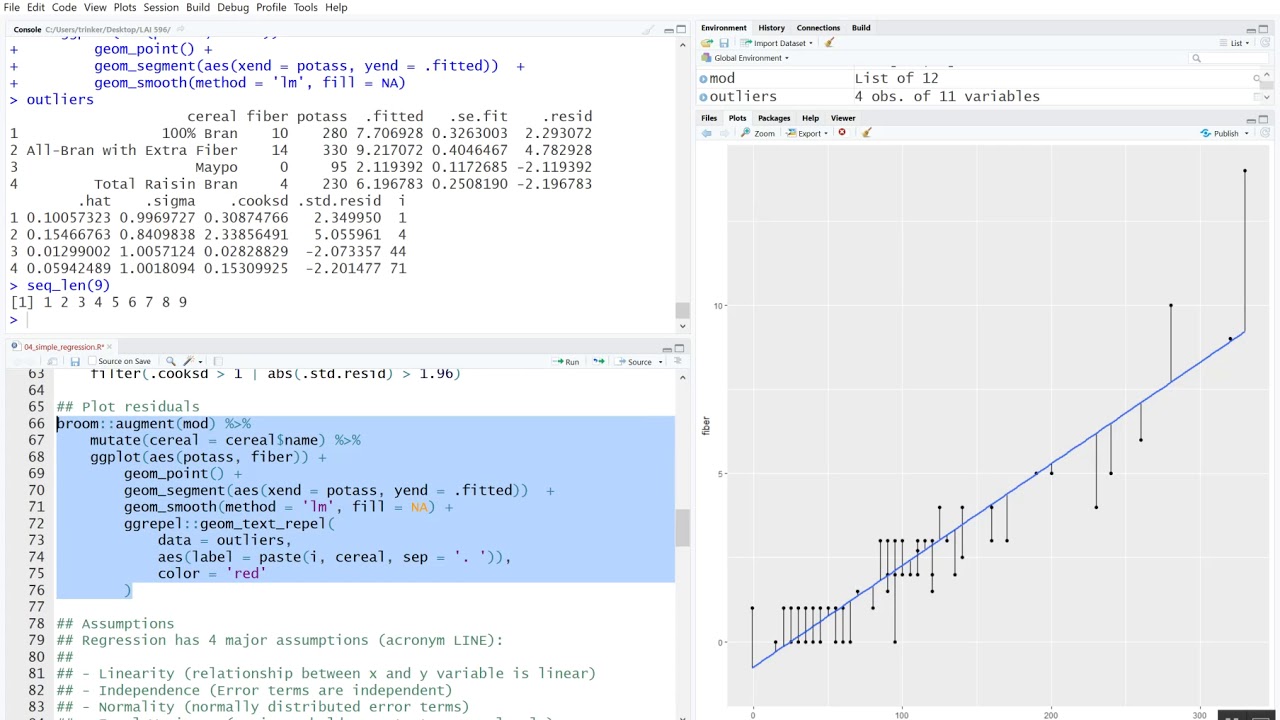

A useful way of dealing with outliers is to run a robust regression, that is, a regression that adjusts the weight assigned to each observation in order to reduce the distortion caused by the outliers. In this particular example, we will build a regression to analyse internet usage in megabytes across different observations.
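The reweighting idea can be sketched with `rlm()` from the MASS package, which fits a Huber M-estimator by iteratively reweighted least squares. The internet-usage data is not shown here, so the data frame `d`, the true slope of 2, and the planted outlier below are all hypothetical stand-ins.

```r
library(MASS)  # recommended package that ships with standard R installations

# Hypothetical data: a clean linear trend plus one large outlier in the response.
set.seed(123)
d <- data.frame(x = 1:30)
d$y <- 5 + 2 * d$x + rnorm(30)
d$y[30] <- d$y[30] + 30          # plant the outlier at the last observation

fit_ols <- lm(y ~ x, data = d)   # ordinary least squares
fit_rob <- rlm(y ~ x, data = d)  # Huber M-estimation (the rlm default)

# Slopes from both fits; the data were generated with slope 2.
slopes <- c(ols    = unname(coef(fit_ols)["x"]),
            robust = unname(coef(fit_rob)["x"]))

# Final IWLS weights: observations with large residuals get weights below 1.
w <- fit_rob$w

print(slopes)
print(round(w[26:30], 3))
```

In this sketch the planted observation should end up with the smallest weight, which is why the robust slope stays closer to the generating value than the least squares slope does.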

Valence Analytics R Robust Regression and Estimation of Model Performance

As I wrote above, by default, the type argument is equal to "HC3". Another way of dealing with heteroskedasticity is to use the lmrob() function from the {robustbase} package. This package offers a wide range of functions for robust linear and nonlinear regression models.
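To make the "HC3" setting concrete, here is a minimal base-R sketch of the HC3 heteroskedasticity-consistent covariance estimator, computed by hand. It assumes the type argument mentioned above refers to this estimator (as it does in `sandwich::vcovHC()`); the simulated data and all variable names are illustrative only.

```r
# Simulated heteroskedastic data: the noise standard deviation grows with x.
set.seed(7)
n <- 200
x <- runif(n, 1, 10)
y <- 2 + 0.5 * x + rnorm(n, sd = 0.3 * x)

fit <- lm(y ~ x)
X <- model.matrix(fit)   # design matrix, including the intercept column
e <- residuals(fit)      # raw residuals
h <- hatvalues(fit)      # leverages (diagonal of the hat matrix)

# HC3 sandwich: (X'X)^{-1} X' diag(e_i^2 / (1 - h_i)^2) X (X'X)^{-1}
bread <- solve(crossprod(X))
omega <- e^2 / (1 - h)^2
meat  <- crossprod(X * omega, X)   # row i of X is scaled by omega[i]
vcov_hc3 <- bread %*% meat %*% bread

# Compare classical and HC3 standard errors.
se_classic <- sqrt(diag(vcov(fit)))
se_hc3     <- sqrt(diag(vcov_hc3))
print(rbind(classic = se_classic, HC3 = se_hc3))
```

The coefficients themselves are unchanged; only the standard errors differ, which is the main contrast with refitting the model using a robust estimator such as lmrob().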

Robust regression YouTube

This is not a robust method per se, but allows for median-based estimation. The function supports only one grouping level but allows the correlation structure of the random effects to be specified. For robust estimation of linear mixed-effects models, there exists a variety of specialized implementations in R.

GLM Robust Linear Regression — PyMC example gallery

Overview. R provides several methods for robust regression to handle data with outliers. This tutorial shows how to fit a data set with a large outlier, comparing the results from both standard and robust regressions. It also serves as a comparison of plotting with base graphics vs. ggplot2, and demonstrates the power of ggplot2.

Using RANSAC for Robust Regression RCraft

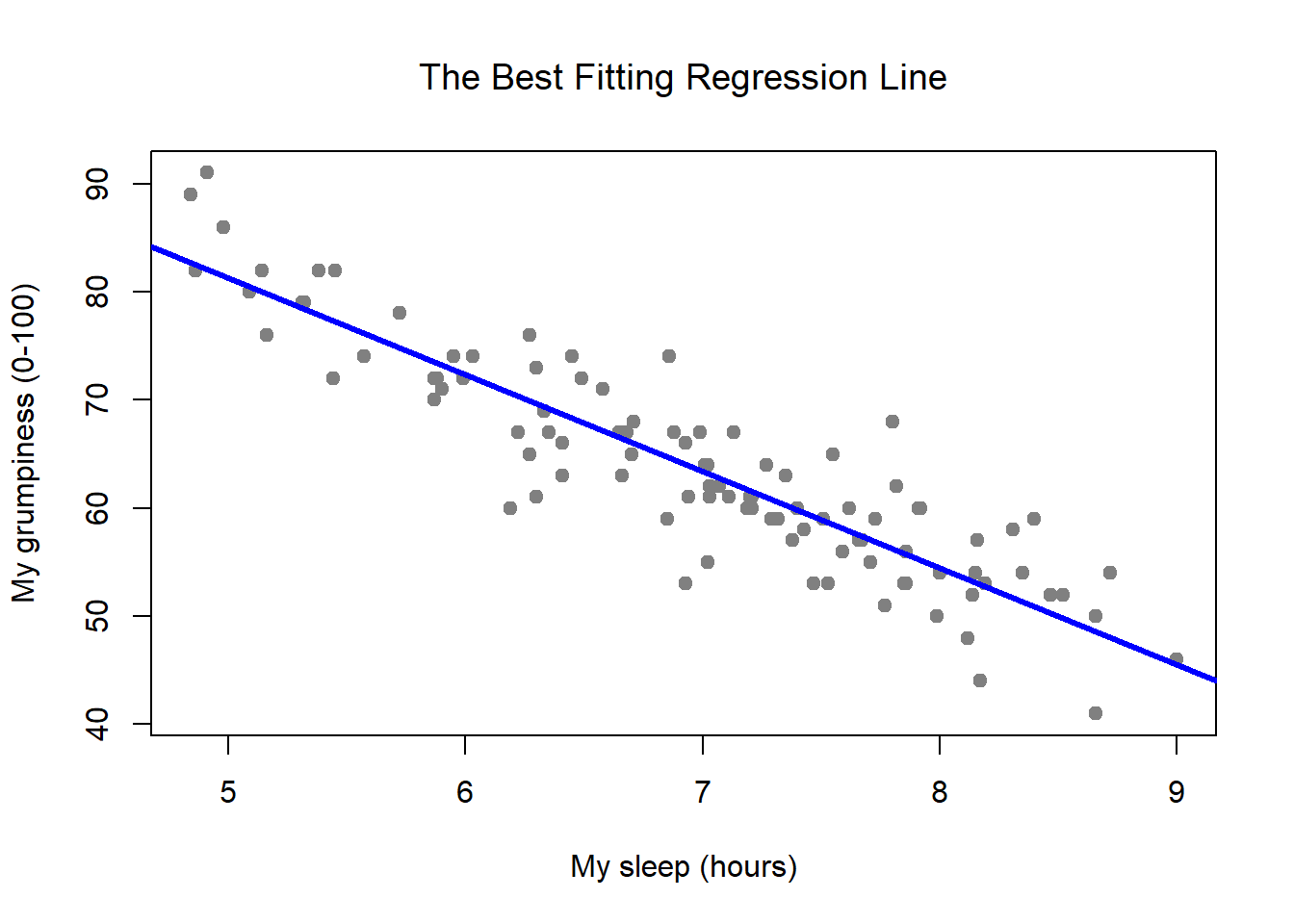

Feb 1, 2022. The idea behind classic linear regression is simple: draw a "best-fit" line across the data points that minimizes the mean squared error. But we don't always get such clean, well-behaved data in real life.

RobMixReg an R package for robust, flexible and high dimensional mixture regression

Robust Linear Models. References: P. J. Huber (1973), 'The 1972 Wald Memorial Lectures: Robust Regression: Asymptotics, Conjectures, and Monte Carlo', The Annals of Statistics, 1(5), 799-821. W. N. Venables and B. D. Ripley, 'Modern Applied Statistics with S', Springer, New York.

Multiple linear regression made simple Rbloggers

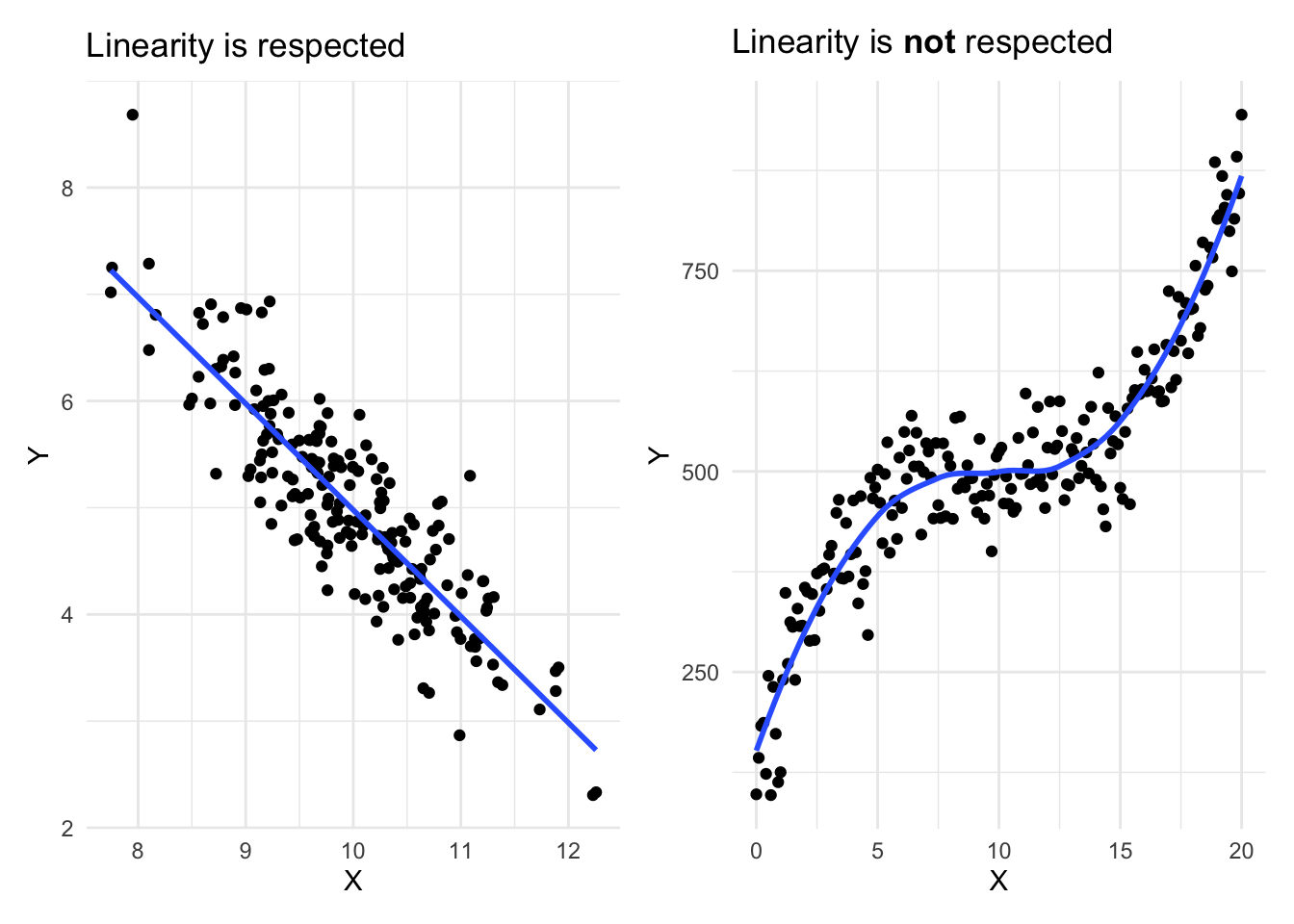

This lesson provides an introduction to some of the other available methods for estimating regression lines. To help with the discussions in this lesson, recall that the ordinary least squares estimate is

\[\hat{\beta}_{\text{OLS}} = \arg\min_{\beta} \sum_{i=1}^{n} \epsilon_i^2 = (X^T X)^{-1} X^T Y.\]

Because of the alternative estimates to be introduced, the ordinary least squares estimate is written here as \(\hat{\beta}_{\text{OLS}}\).
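As a quick check of the closed-form expression above, the following sketch computes \((X^T X)^{-1} X^T Y\) directly and compares it with the coefficients returned by lm(). The simulated data and the variable names are assumptions made for illustration.

```r
# Simulated data; n, x1, x2, y and X are illustrative names only.
set.seed(1)
n  <- 100
x1 <- rnorm(n)
x2 <- rnorm(n)
y  <- 1 + 2 * x1 - 0.5 * x2 + rnorm(n)

# Design matrix with an explicit intercept column.
X <- cbind(Intercept = 1, x1 = x1, x2 = x2)

# Closed form (X^T X)^{-1} X^T Y, solved as a linear system
# instead of forming the inverse explicitly.
beta_closed <- drop(solve(crossprod(X), crossprod(X, y)))

# Reference estimate from lm().
beta_lm <- unname(coef(lm(y ~ x1 + x2)))

# Largest absolute difference between the two estimates.
max_diff <- max(abs(beta_closed - beta_lm))
print(beta_closed)
print(max_diff)
```

The two estimates should agree up to floating-point error; lm() reaches the same minimizer through a QR decomposition, which is numerically more stable than working with \(X^T X\).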

Basic Linear Regressions for Finance Rbloggers

Details. By default, the lmRob function automatically chooses an appropriate algorithm to compute a final robust estimate with high breakdown point and high efficiency. The final robust estimate is computed based on an initial estimate with high breakdown point. For the initial estimation, the alternate M-S estimate is used if there are any factor variables in the predictor matrix, and an S-estimate is used otherwise.

04 Simple Linear Regression in R YouTube

An object of class "lm_robust". The post-estimation functions summary and tidy return results in a data.frame. To get useful data out of the return value, you can use these data frames, use the resulting list directly, or use the generic accessor functions coef, vcov, confint, and predict.

Illustration of the robust model, parameterized by β on a linear... Download Scientific Diagram

Robust regression is an alternative to least squares regression when data are contaminated with outliers or influential observations, and it can also be used for the purpose of detecting influential observations. Reference: John Fox, Applied Regression Analysis, Linear Models, and Related Methods, Sage Publications, Inc., 1997. See also the R documentation.

How to apply Linear Regression in R Rbloggers

The focus of this contribution was on robust linear regression methods for high-dimensional data. As in the low-dimensional case, there are two types of outliers that need to be taken care of: vertical outliers, which are outliers in the response variable deviating from the linear trend, and bad leverage points, which are outliers in the predictor space that also deviate from the linear trend.

Linear Regression Infographics

Robust regression is a technique that can reduce the impact of outliers, violations of distributional assumptions, and heterogeneity in variance. Note that the linearity assumption is still needed for proper inference using robust regression. In this example, we will use the Stars dataset from the robustbase package.

Robust Regression for Machine Learning in Python

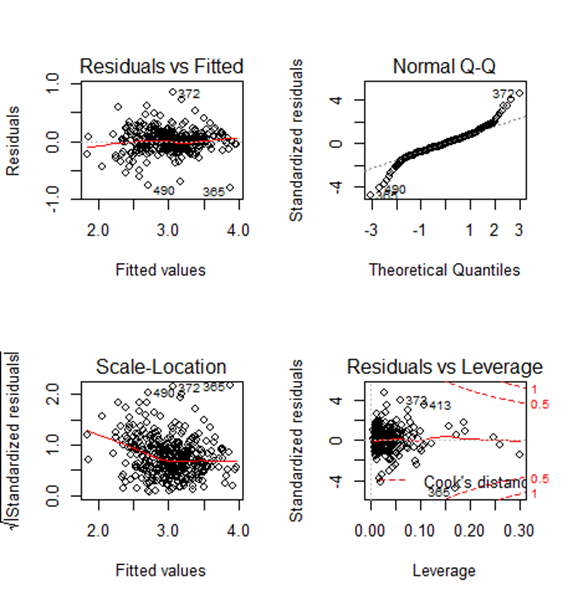

Step 2: Perform Ordinary Least Squares Regression. Next, let's fit an ordinary least squares regression model and create a plot of the standardized residuals. In practice, we often consider any standardized residual with an absolute value greater than 3 to be an outlier.

    # fit ordinary least squares regression model
    ols <- lm(y ~ x1 + x2, data = df)
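The data frame df is not shown in this step, so the sketch below builds a hypothetical one with the same column names (y, x1, x2) and a single planted outlier, then applies the "greater than 3" rule to the standardized residuals from rstandard().

```r
# Hypothetical stand-in for df: two predictors, linear response, one planted outlier.
set.seed(42)
n  <- 50
df <- data.frame(x1 = rnorm(n), x2 = rnorm(n))
df$y <- 3 + 1.5 * df$x1 - 2 * df$x2 + rnorm(n)

planted <- 10
df$y[planted] <- df$y[planted] + 20   # shift one response far from the trend

# Fit ordinary least squares regression model.
ols <- lm(y ~ x1 + x2, data = df)

# Standardized (internally studentized) residuals.
std_resid <- rstandard(ols)

# Flag observations whose standardized residual exceeds 3 in absolute value.
flagged <- which(abs(std_resid) > 3)
print(flagged)

# Plot standardized residuals against fitted values (interactive sessions only).
if (interactive()) {
  plot(fitted(ols), std_resid,
       xlab = "Fitted values", ylab = "Standardized residuals")
  abline(h = c(-3, 3), lty = 2)
}
```

The cutoff of 3 is the rule of thumb stated above, not a formal test; observations it flags are candidates for closer inspection rather than automatic removal.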
